OpenAI Facing FTC Investigation: Examining The Risks Of AI

The FTC Investigation and its Implications

The Federal Trade Commission (FTC) investigation into OpenAI marks a pivotal moment in the regulation of artificial intelligence. Although the specific allegations have not been fully disclosed, the investigation reportedly centers on possible violations related to data privacy, algorithmic bias, and misuse of the technology. The implications are far-reaching, affecting not only OpenAI but the entire AI industry.

- Likely focus of the investigation: The FTC is likely examining how OpenAI collects, uses, and protects the data used to train its large language models (LLMs). Concerns about biased outputs and the spread of misinformation through its models are also likely factors.

- Potential fines and regulatory changes: Depending on the findings, OpenAI could face substantial fines and be subjected to strict regulatory oversight. This could involve mandatory data security audits, restrictions on data collection practices, and limitations on the deployment of specific AI models.

- Impact on OpenAI's future development and deployment: The investigation's outcome will significantly influence OpenAI's future direction. It could lead to delays in product releases, increased costs associated with compliance, and a reevaluation of its data handling practices.

- Precedent for other AI companies: Whatever its outcome, this investigation could set a critical precedent for other AI companies. It signals a growing willingness by regulators to examine how AI is developed and deployed, which may lead to closer oversight across the industry.

The FTC's actions underscore the broader challenge of regulating AI, a rapidly evolving field that often outpaces lawmakers' ability to create effective oversight. Other major AI developers also face growing scrutiny, which highlights the need for a comprehensive and proactive approach to AI regulation.

Data Privacy Concerns and AI

The training and use of large language models (LLMs) like those developed by OpenAI raise significant data privacy concerns. These models require massive datasets for training, often including personal information scraped from the internet or obtained through user interactions.

- Scale of training data and information leakage: The sheer volume of data used to train LLMs creates a substantial risk of leaking sensitive information, for example if a model reproduces personal details it encountered during training.

- Difficulty of anonymizing data effectively: Completely anonymizing data for AI training is extremely challenging. Even when direct identifiers such as names are removed, individuals can often be re-identified by linking the remaining attributes to other datasets.

- Potential for misuse of personal data: AI systems trained on personal data could potentially be misused for surveillance, profiling, or other harmful purposes.

- Applicability of data protection regulations: Existing regulations like GDPR (General Data Protection Regulation) and CCPA (California Consumer Privacy Act) are struggling to keep pace with the rapid advancements in AI, creating a regulatory gap.
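To make the re-identification risk concrete, the Python sketch below illustrates a simple linkage attack. It assumes two small, entirely invented datasets: an "anonymized" table with names removed but ZIP code, birth year, and sex retained, and a public table that still includes names. The field names and the helper functions `key` and `link` are hypothetical and exist only for this illustration. Real attacks work on far larger and messier data, but the principle is the same: attributes that look harmless on their own can single out one person when combined.

```python
# Toy illustration of a linkage (re-identification) attack.
# All records are invented; the field names are hypothetical.

# "Anonymized" records: names removed, but quasi-identifiers kept.
anonymized = [
    {"zip": "02138", "birth_year": 1965, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1980, "sex": "M", "diagnosis": "diabetes"},
    {"zip": "02138", "birth_year": 1990, "sex": "M", "diagnosis": "none"},
]

# A separate public dataset that still contains names.
public = [
    {"name": "Alice", "zip": "02138", "birth_year": 1965, "sex": "F"},
    {"name": "Bob", "zip": "02139", "birth_year": 1980, "sex": "M"},
    {"name": "Carol", "zip": "02140", "birth_year": 1975, "sex": "F"},
]

QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")


def key(record):
    """Project a record onto its quasi-identifier fields."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def link(anonymized_rows, public_rows):
    """Return (name, diagnosis) pairs for anonymized rows whose
    quasi-identifiers match exactly one named person."""
    index = {}
    for person in public_rows:
        index.setdefault(key(person), []).append(person["name"])
    matches = []
    for row in anonymized_rows:
        names = index.get(key(row), [])
        if len(names) == 1:  # a unique match re-identifies the record
            matches.append((names[0], row["diagnosis"]))
    return matches


if __name__ == "__main__":
    for name, diagnosis in link(anonymized, public):
        print(f"{name}: {diagnosis}")
```

Running it prints `Alice: asthma` and `Bob: diabetes`: two of the three "anonymized" records are re-identified because their combination of ZIP code, birth year, and sex matches exactly one named person in the public table.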

The ethical implications of using personal data for AI development without explicit and informed consent are substantial. Robust data governance frameworks and transparent data handling practices are crucial to mitigate these AI risks.

Bias and Discrimination in AI Systems

A significant AI risk is the potential for AI systems to perpetuate and amplify existing societal biases. This can lead to discriminatory outcomes across various applications.

- Biased training data leading to discriminatory outcomes: If the data used to train an AI model reflects existing societal biases, the model will likely learn and reproduce these biases in its outputs.

- Examples of AI bias: Bias has been observed in facial recognition systems (often less accurate for people of color), automated lending decisions (potentially discriminating against certain demographic groups), and even language models (perpetuating gender or racial stereotypes).

- Challenge of identifying and mitigating bias: Identifying and mitigating bias in complex AI models is a significant technical challenge. It requires careful data curation, algorithm design, and ongoing monitoring.

- Importance of diverse and representative datasets: Using diverse and representative datasets for training AI models is essential for mitigating bias. This involves actively seeking out and incorporating data from underrepresented groups.
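One concrete form the ongoing monitoring mentioned above can take is comparing a model's outcomes across demographic groups. The minimal Python sketch below assumes invented approval decisions for two hypothetical groups; it computes per-group approval rates and the ratio of the lowest rate to the highest. The 0.8 cutoff is the "four-fifths" rule of thumb from US employment-selection guidance, used here only as an illustrative threshold. A single metric like this can flag a disparity worth investigating, but it cannot by itself establish or rule out discrimination.

```python
from collections import defaultdict

# Hypothetical model decisions: (applicant group, approved?). Invented data.
decisions = [
    ("group_a", True), ("group_a", True), ("group_a", True), ("group_a", False),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def approval_rates(rows):
    """Compute the fraction of approved applications for each group."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for group, ok in rows:
        total[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / total[group] for group in total}


def disparate_impact_ratio(rates):
    """Ratio of the lowest group approval rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group is approved at all, so no group is favored
    return min(rates.values()) / highest


if __name__ == "__main__":
    rates = approval_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    print(rates)
    print(f"disparate impact ratio: {ratio:.2f}")
    if ratio < 0.8:  # illustrative "four-fifths" rule-of-thumb threshold
        print("possible adverse impact: investigate further")
```

With this toy data, group_a is approved 75% of the time and group_b 25% of the time, giving a ratio of about 0.33, well below the illustrative 0.8 cutoff.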

The social and economic consequences of biased AI systems can be severe, leading to unfair or discriminatory treatment of certain individuals or groups. Strategies for promoting fairness and equity in AI development must be central to responsible innovation.

The Spread of Misinformation and Deepfakes

AI technologies also pose a significant threat through their potential for generating and disseminating misinformation and deepfakes.

- Creation of realistic but false content: AI can now create highly realistic images, videos, and audio recordings that are completely fabricated. These deepfakes can be used to spread propaganda, damage reputations, or manipulate public opinion.

- Impact on public trust and political discourse: The ease with which deepfakes can be created poses a major threat to public trust and the integrity of political discourse. Distinguishing between real and fake content becomes increasingly difficult.

- Challenges in detecting and combating AI-generated misinformation: Detecting and combating AI-generated misinformation requires a multi-pronged approach, involving technological solutions, media literacy education, and collaboration between technology companies, governments, and civil society organizations.

- Role of social media platforms: Social media platforms play a crucial role in the rapid spread of AI-generated content, making it essential for them to implement effective detection and moderation strategies.

Developing effective strategies for media literacy and investing in technologies capable of detecting AI-generated misinformation are crucial for mitigating these AI risks.

Conclusion

The FTC's investigation into OpenAI serves as a crucial wake-up call, highlighting the significant risks that accompany the rapid advancement of artificial intelligence. Addressing concerns around data privacy, bias, and the spread of misinformation is paramount to ensuring the responsible development and deployment of AI. We need robust regulations and ethical guidelines to mitigate these risks and harness the potential of AI for the benefit of society. Learn more about the evolving landscape of AI regulation and the ongoing debate surrounding AI risk management to stay informed and contribute to a safer future for AI.

Featured Posts

-

Denny Hamlins Popularity Soars With Michael Jordans Backing

Apr 28, 2025

Denny Hamlins Popularity Soars With Michael Jordans Backing

Apr 28, 2025 -

Almwed Me Fn Abwzby 19 Nwfmbr

Apr 28, 2025

Almwed Me Fn Abwzby 19 Nwfmbr

Apr 28, 2025 -

Mlb Game Recap Twins Edge Out Mets 6 3

Apr 28, 2025

Mlb Game Recap Twins Edge Out Mets 6 3

Apr 28, 2025 -

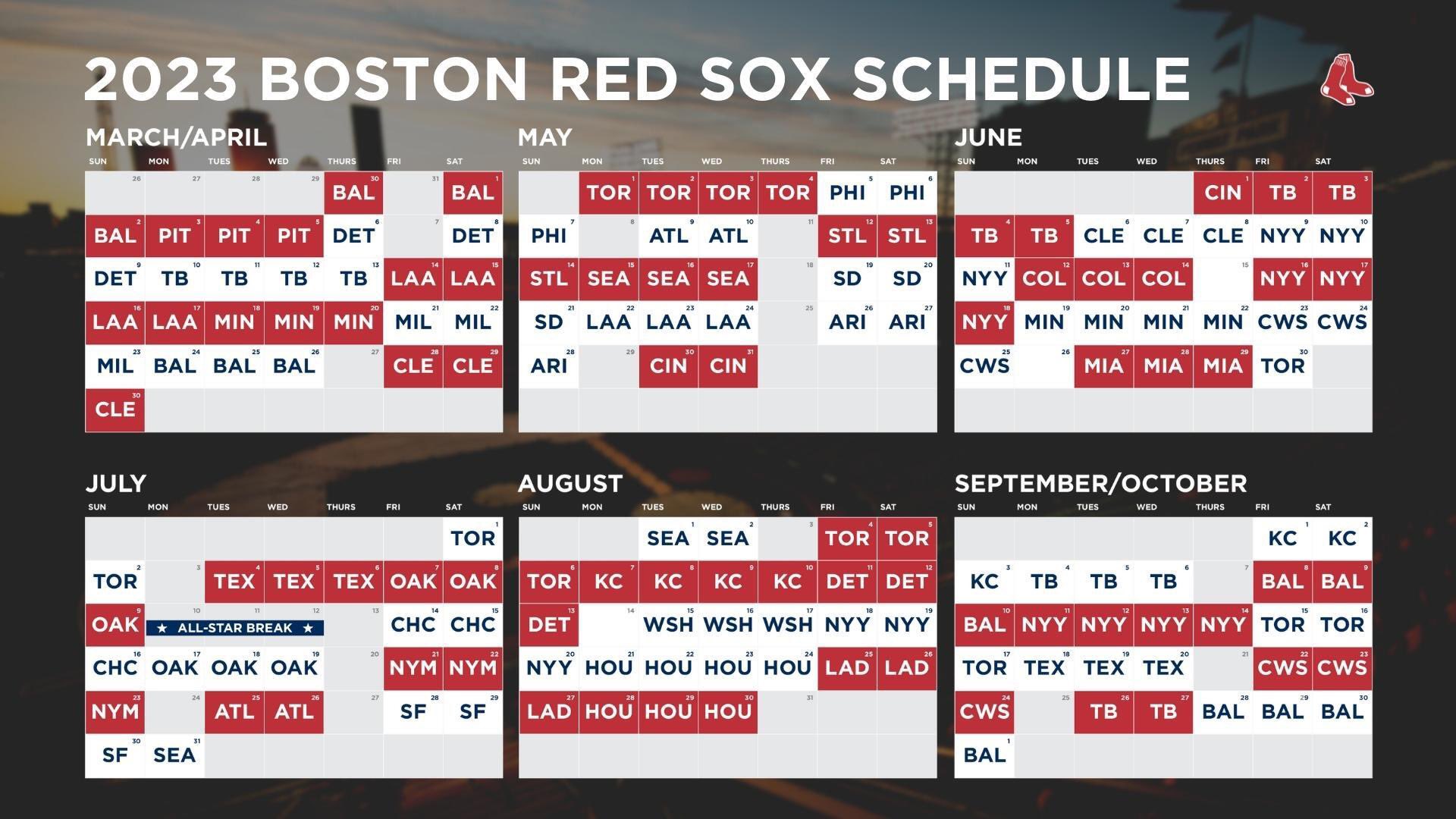

Espns Bold 2025 Red Sox Outfield Prediction Is It Realistic

Apr 28, 2025

Espns Bold 2025 Red Sox Outfield Prediction Is It Realistic

Apr 28, 2025 -

Newfound Edge Mets Starters Rise In The Rotation Battle

Apr 28, 2025

Newfound Edge Mets Starters Rise In The Rotation Battle

Apr 28, 2025